Automated requests and Cloudflare's TLS fingerprinting

My (initially unsuccessful) attempts to fetch a RSS feed via curl

When making automated requests to a RSS feed, I recently came across very peculiar behaviour. The RSS feed (lawgazette.com.sg/feed/) would load just fine in my browser (Firefox) but not when I used curl, e.g.

> curl https://lawgazette.com.sg/feed/

<!DOCTYPE html><html lang="en-US"><head><title>Just a moment...</title><meta http-equiv="Content-Type" content="text/html; charset=UTF-8"><meta http-equiv="X-UA-Compatible" content="IE=Edge"><meta name="robots" content="noindex,nofollow"><meta name="viewport" content="width=device-width,initial-scale=1"><link href="/cdn-cgi/styles/challenges.css" rel="stylesheet"></head><body class="no-js"><div class="main-wrapper" role="main"><div class="main-content"><noscript><div id="challenge-error-title"><div class="h2"><span class="icon-wrapper"><div class="heading-icon warning-icon"></div></span><span id="challenge-error-text">Enable JavaScript and cookies to continue</span></div></div></noscript></div></div><script>

// blah blah blah, you get the idea

<script defer src="https://static.cloudflareinsights.com/beacon.min.js/v8b253dfea2ab4077af8c6f58422dfbfd1689876627854" integrity="sha512-bjgnUKX4azu3dLTVtie9u6TKqgx29RBwfj3QXYt5EKfWM/9hPSAI/4qcV5NACjwAo8UtTeWefx6Zq5PHcMm7Tg==" data-cf-beacon='{"rayId":"812eb66bb8535c33","version":"2023.8.0","b":1,"token":"ede0727b61e541778f23feef22f36592","si":100}' crossorigin="anonymous"></script>

</body></html>%The unminified HTML returned above is Cloudflare's challenge page.

Investigation

Pretty simple, I thought, Cloudflare is probably running all sorts of 'real browser' checks, e.g. calling HTML canvas APIs, checking what fonts are installed, looking at the user agent, etc. But then I noticed that I had the JShelter browser extension enabled, which thwarts most fingerprinting attempts. Odd.

If I had been ssh'ed into a VPS, I would have chalked it up to IP address blacklisting or greylisting, but I was calling curl from my own residential IP address.

Since the page I was loading was a RSS feed, I popped it into a desktop RSS reader, Fluent Reader, just to check, fully expecting that the HTTP request sent by the RSS reader would trigger the Cloudflare challenge. But the feed loaded just fine. Very odd.

At this point, I recalled that Fluent Reader was an Fluent Reader so it was possible that the request was being sent via headless Chrome, which might pass the 'real browser' checks. It seemed unlikely the RSS feed was being fetched in the frontend headless Chrome layer rather than the Node.JS backend, not least because the former would give rise to CORS issues, but I decided to try fetching the RSS feed in a non-Electron app, QuiteRSS, just to be sure.

It worked again. Very odd. Perhaps there was some kind of secret HTTP header sent by RSS clients? I ran mitmproxy to intercept the HTTP requests sent by QuiteRSS and began to investigate.

As far as I could tell, the HTTP requests being sent by QuiteRSS and my own HTTP requests via curl were identical. Save for minor differences in the TLS version. Surely not? I added the --tlsv1.3 flag to force curl to use TLS 1.3. Nope, still didn't work.

Resolution

But that got me thinking. Maybe there were minute differences in the way TLS was implemented in curl and in web browsers like Chrome and Firefox that Cloudflare was taking advantage of to identify curl requests and show challenge pages in response.

Fortunately for me, I am far from the first person to be plagued by this issue, and others far more talented than I had already implemented a solution: lwthiker/curl-impersonate, which is a derivative of curl that performs HTTP and TLS handshakes identical to that of Chrome and Firefox. I installed the dependencies, downloaded the binary, and made my HTTP request:

sudo apt install -y libnss3 nss-plugin-pem ca-certificates

curl-impersonate-ff -H 'Accept: application/atom+xml,application/rss+xml;q=0.9,application/xml;q=0.8,text/xml;q=0.7,*/*;q=0.6' -H 'User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/118.0' https://lawgazette.com.sg/category/notices/disciplinary-tribunal-reports/feed/It worked beautifully.

Whitelisting

It looks like most major RSS readers (and other 'bots') don't have to use this workaround because they are whitelisted by Cloudflare.1 Aside from the usual suspects (Googlebot, Bingbot, etc.) there are also entries from other tech solution providers such as OpenAI, Better Uptime, Slack, and Telegram, as well as RSS readers such as Feedly, Feeder, and Feedbin. Under this system, 'friendly bots' to be submitted to Cloudflare to be whitelisted after verification.

This is fine, but should there be a need to get Cloudflare's permission before fetching a RSS feed? Since the verification methods that Cloudflare supports for validating traffic are reverse DNS and IP range whitelisting only, the system doesn't seem to allow for whitelisting of client-side solutions that run on the user's machine such that the IP address cannot be pre-determined.

Looking beyond

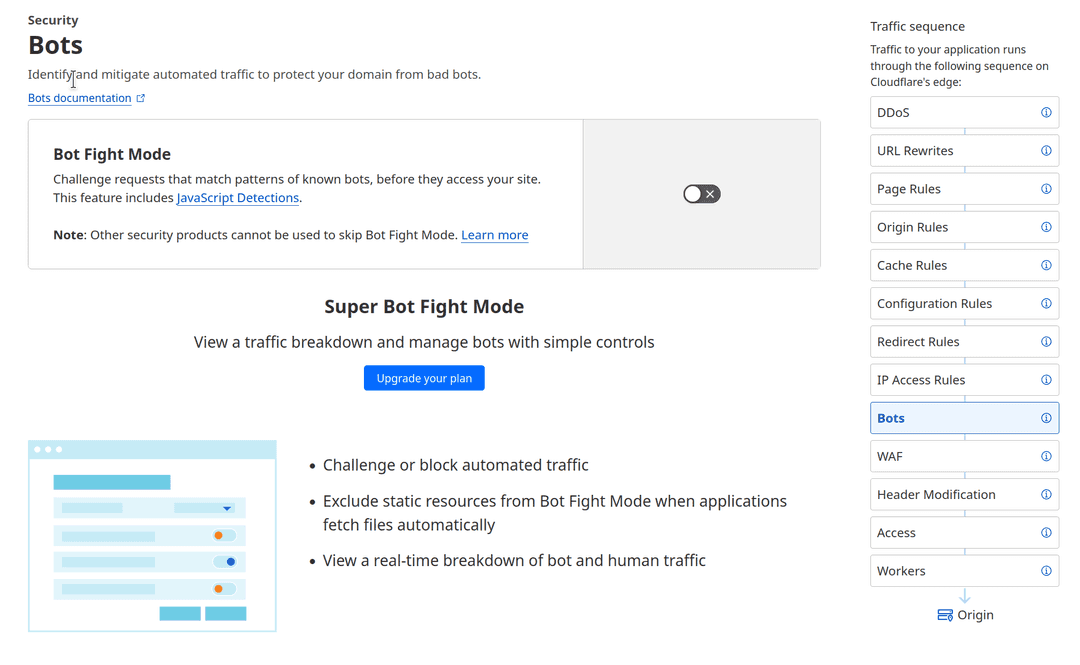

Separately, what remains unclear to me is why a challenge page was shown for this particular RSS feed. Many sites are behind Cloudflare these days (including this one), and Cloudflare doesn't show a challenge page for vanilla curl requests to my feed, so why that one? My suspicion is that it has something to do with the Cloudflare security configuration for that site:

Maybe it has 'Bot Fight Mode' or Cloudflare's general 'Under Attack' mode turned on? The showing of the challenge page is clearly not intentional — it's a RSS feed; it's meant to be fetched in an automated fashion, not read in a browser directly. It's not difficult to get around this at the moment, but there shouldn't be a need for developers to play this sort of cat-and-mouse game for non-malicious applications.2

Considering how widespread usage of Cloudflare (and other similar solutions such as Akamai) is, there does appear to be a risk to RSS as a technology as well as to the open web more broadly if this sort of blocking, solely based on the nature of the request, becomes the default option or the recommended option on the basis of 'security'.

- This list is not exhaustive.↩

- This unintended blocking of RSS feeds as a result of heavy-handed anti-DDoS and anti-bot measures has also been highlighted by others. See e.g. Kevin Cox, "Problems with Cloudflare Bot Blocking" (2021), this, etc.↩